We previously wrote about our work on deep neural networks for speech enhancement. In late August, we presented our newest results as a paper and a poster at the speech technology conference Interspeech 2017 in Stockholm, Sweden.

Speech enhancement with deep neural networks

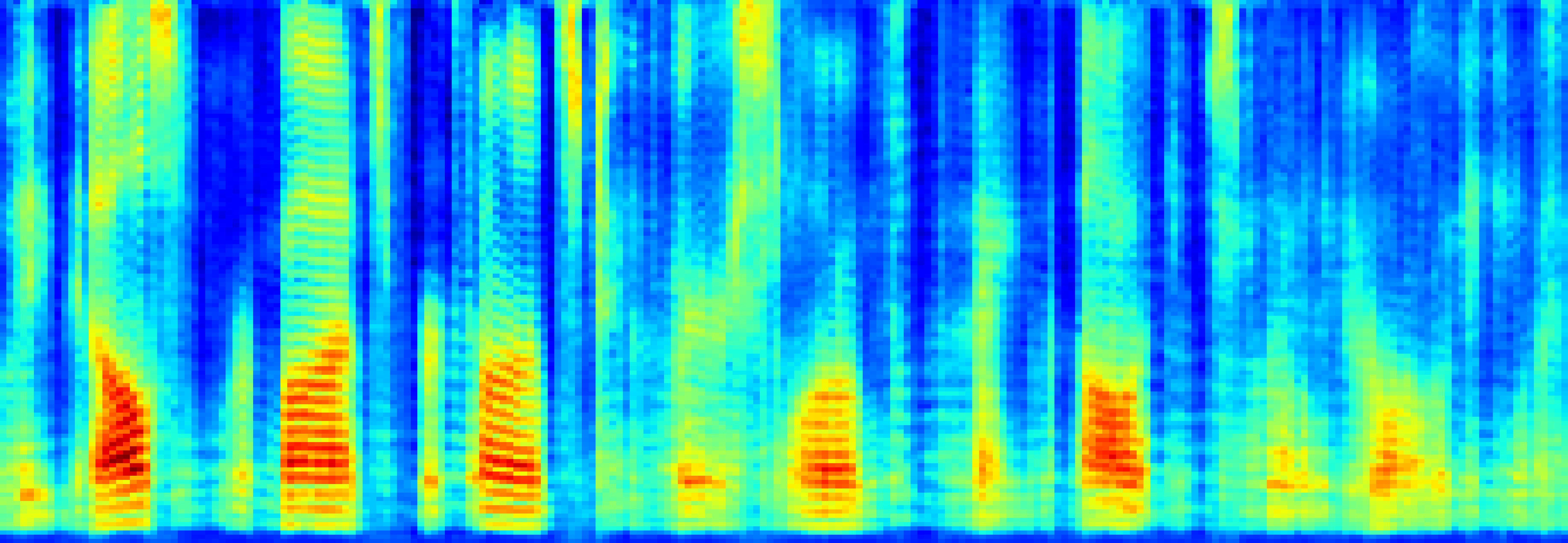

One goal of speech enhancement is to remove unwanted noise: to take a speech signal corrupted by noise and strip the noise away, leaving clean speech. This is particularly useful for speech recorded in noisy places, such as a sidewalk next to traffic or a busy cantina. If we could remove background noise from a phone conversation, for instance, the people on the call could understand each other better.

Deep neural networks (DNNs) are one of the most promising approaches to this task. A DNN is a trainable algorithm that can be applied to many different problems; you can find more details in our previous post. However, training DNNs is not straightforward. There are many ways to construct the network and many training parameters to choose, so the common approach is to train many different networks and compare them to find out which configuration works best.

Testing the system

However, it is not easy to measure how much a DNN improves speech intelligibility and quality. The ‘correct’ way is, of course, to have people listen to the results and evaluate them. Unfortunately, such subjective evaluations are time-consuming, and we cannot run one for every DNN.

Therefore, most researchers working on DNN-based speech enhancement use objective measures. These are mathematical functions designed to estimate speech intelligibility and/or quality in a way that agrees with human listeners. Most of them compare a reference signal with a modified signal: the reference is typically perfectly clean speech, and the modified signal might be the same speech with added noise. Objective measures can therefore evaluate the intelligibility or quality of a noisy speech signal before and after ‘cleaning’ it with the DNN. The hope is that the DNN that improves the objective measures the most will also work best for human listeners.
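To make the reference-versus-modified idea concrete, here is a minimal Python sketch using the signal-to-noise ratio (SNR). SNR is a much simpler measure than the intelligibility measures discussed in this post and is swapped in here purely as an illustration. The sketch assumes the clean reference, the noisy input and the enhanced output are time-aligned lists of samples of equal length; the function names and the toy signals are hypothetical.

```python
import math

def snr_db(reference, modified):
    """SNR in dB of `modified`, treating everything that differs from
    the clean `reference` as noise."""
    if len(reference) != len(modified):
        raise ValueError("signals must have the same length")
    signal_power = sum(r * r for r in reference)
    noise_power = sum((m - r) ** 2 for r, m in zip(reference, modified))
    if noise_power == 0:
        return math.inf  # modified signal is identical to the reference
    return 10 * math.log10(signal_power / noise_power)

def snr_improvement_db(clean, noisy, enhanced):
    """Gain in dB of the enhanced output over the unprocessed noisy input,
    both scored against the same clean reference."""
    return snr_db(clean, enhanced) - snr_db(clean, noisy)

# Toy example: a sine wave plus deterministic noise; the "enhanced" signal
# simply has the noise halved, standing in for a DNN's output.
clean = [math.sin(2 * math.pi * 5 * n / 100) for n in range(100)]
noise = [0.3 * (-1) ** n for n in range(100)]
noisy = [c + d for c, d in zip(clean, noise)]
enhanced = [c + 0.5 * d for c, d in zip(clean, noise)]
print(round(snr_improvement_db(clean, noisy, enhanced), 2))  # 6.02
```

Halving the noise amplitude quarters the noise power, so the score rises by 10·log10(4) ≈ 6 dB. Whether listeners would actually understand the enhanced speech better is exactly the question such a number cannot answer on its own.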

Our work

One of the most commonly used objective measures of intelligibility is the short-time objective intelligibility (STOI) measure. Its authors found that it predicts human intelligibility well in a number of conditions. STOI is also popular for practical reasons: a reference implementation is available, and unlike some other measures that require expensive licences, STOI is free for anyone to use. However, STOI does not predict intelligibility well in all cases, so we wanted to test whether it also correctly predicts how DNN speech enhancement affects intelligibility.
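STOI's core idea is to correlate the short-time temporal envelopes of the clean and the processed speech over short segments of a few hundred milliseconds and average the result. The Python sketch below is a heavily simplified, single-band toy in that spirit, not STOI itself: real STOI first splits the signals into one-third-octave bands and normalises and clips the processed envelope, none of which is done here. The function names, frame length and segment length are hypothetical choices for illustration.

```python
import math

def frame_envelope(signal, frame_len):
    """RMS energy of consecutive, non-overlapping frames."""
    return [
        math.sqrt(sum(x * x for x in signal[i:i + frame_len]) / frame_len)
        for i in range(0, len(signal) - frame_len + 1, frame_len)
    ]

def pearson(a, b):
    """Pearson correlation coefficient; 0.0 if either input is constant."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / math.sqrt(var_a * var_b)

def toy_envelope_correlation(clean, processed, frame_len=64, seg_frames=30):
    """Mean correlation between sliding segments of the two envelopes.
    Single band, no normalisation or clipping: a toy, not real STOI."""
    env_c = frame_envelope(clean, frame_len)
    env_p = frame_envelope(processed, frame_len)
    scores = [
        pearson(env_c[i:i + seg_frames], env_p[i:i + seg_frames])
        for i in range(len(env_c) - seg_frames + 1)
    ]
    return sum(scores) / len(scores) if scores else 0.0

# Amplitude-modulated tone as a stand-in for speech; a quieter copy has
# the same envelope shape, so the toy score is (numerically) 1.
clean = [(1 + 0.8 * math.sin(2 * math.pi * n / 2000))
         * math.sin(2 * math.pi * n / 20) for n in range(8000)]
quieter = [0.5 * x for x in clean]
print(round(toy_envelope_correlation(clean, quieter), 3))  # 1.0
```

Because the score only looks at how envelopes co-vary, it ignores overall level and can miss distortions that listeners find harmful, which is one reason a measure of this kind needs to be checked against listening tests.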

To find out whether STOI tracks the effect of DNN speech enhancement, we trained a DNN for speech enhancement as described in our previous post and measured its effect on intelligibility using both STOI and human listeners. We presented our test subjects with random spoken sentences in noise and asked them to pick out the words. While STOI predicted that the DNN speech enhancement system slightly improves intelligibility, our test subjects uniformly found that it actually significantly reduces intelligibility.
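A listening test like this is usually summarised as the share of words each listener got right, averaged per condition (for example noisy versus enhanced). The Python sketch below shows one simple way to compute such a score. It is an assumption for illustration, not the scoring procedure from our paper: it treats each sentence as a bag of words and ignores word order, and the function names and example sentences are hypothetical.

```python
def words_correct(target, response):
    """Fraction of the target sentence's words that the listener reported.
    Case-insensitive, order ignored; each reported word matches at most once."""
    target_words = target.lower().split()
    if not target_words:
        raise ValueError("target sentence is empty")
    remaining = response.lower().split()
    hits = 0
    for word in target_words:
        if word in remaining:
            remaining.remove(word)  # consume the match so it is not reused
            hits += 1
    return hits / len(target_words)

def condition_score(trials):
    """Mean words-correct over (target, response) pairs for one condition."""
    return sum(words_correct(t, r) for t, r in trials) / len(trials)

# Hypothetical single trial: one of six words missed.
print(round(words_correct("the cat sat on the mat", "the cat sat on a mat"), 3))  # 0.833
```

Comparing `condition_score` for the noisy and the enhanced trials gives the human-listener counterpart of the STOI difference between the two conditions.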

Given this mismatch, we advise against relying on STOI alone to evaluate whether a DNN speech enhancement system is well trained. The same advice applies to any other objective measure that has not yet been validated against listening tests for this case.

Would you like to know more?

You can download our conference poster and our conference paper at ResearchGate. If you want to hear how the noisy speech sounds before and after speech enhancement, you can hear some sound examples here.