We previously wrote about our work on deep neural networks for speech enhancement. In late August, we presented our newest results as a paper and a poster at the speech technology conference Interspeech 2017 in Stockholm, Sweden.

Speech Enhancement with Deep Learning

Using deep learning to improve the intelligibility of noise-corrupted speech signals

Speech is key to our ability to communicate. We already use recorded speech to communicate remotely with other humans, and we will grow more and more used to machines that simply ‘listen’ to us. However, we want our phones, laptops, hearing aids, and voice-controlled or Internet of Things (IoT) devices to work in every environment, and most environments are noisy.

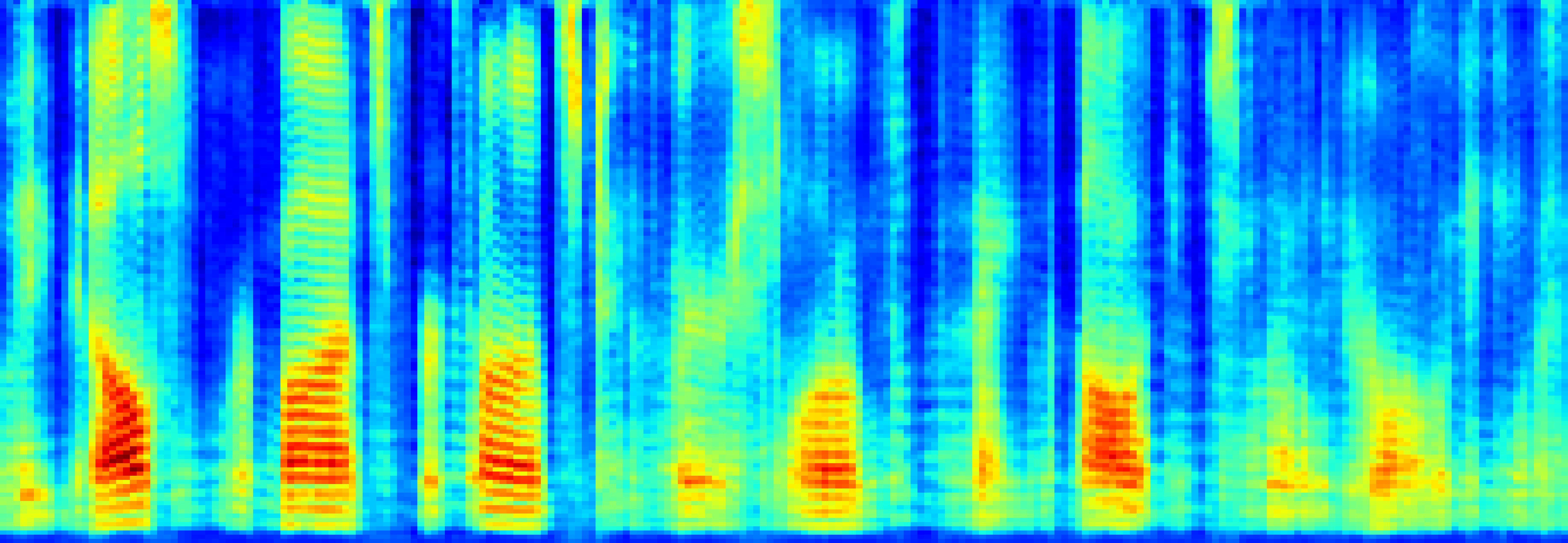

This creates the need for speech enhancement techniques that remove noise from recorded speech signals. Yet, as of today, there are no noise-filtering strategies that significantly help people understand single-channel noisy speech, and even state-of-the-art voice assistants fail miserably in noisy environments. Some recent publications suggest that deep learning, a machine learning subfield based on deep neural networks (DNNs), could become a game-changer in the field of speech enhancement. See, for example, reference [1] below.

In this blog post, we will go through a relatively simple application of deep learning to speech enhancement. Scroll down to the end of this post if you just want to hear what the resulting enhanced samples can sound like.