Using deep learning to improve the intelligibility of noise-corrupted speech signals

Speech is key to our ability to communicate. We already use recorded speech to communicate remotely with other humans and we will get more and more used to machines that simply ‘listen’ to us. However, we want our phones, laptops, hearing aids, voice controlled and/or Internet of Things (IoT) devices to work in every environment — the majority of environments being noisy.

This creates the need for speech enhancement techniques that remove noise from recorded speech signals. Yet, as of today, there are no noise-filtering strategies that significantly help people understand single-channel noisy speech, and even state-of-the-art voice assistants fail miserably in noisy environments. Some recent publications on speech enhancement show that deep learning, a machine learning subfield based on deep neural networks (DNNs), will become a game-changer in the field of speech enhancement. See for example reference [1] below.

In this blog post we will go through a relatively simple implementation of Deep Learning to speech enhancement. Scroll down to the end of this post if you just want to know what the resulting enhanced samples can sound like.

Pre-processing

For simplicity’s sake, we will treat the Deep Neural Network as a black box that takes an input vector x and returns an output vector y. As we want the network to clean noisy speech samples, x will represent noisy speech, while y represents the enhanced speech.

A recording, like the 4.3 s long noisy sentence below, is in essence already a vector of sequential samples, and therefore could directly be used as input to our DNN. However, even though this sentence was recorded with a rather low sample frequency of 8000 Hz, the entire input vector would be massive: 4.3 s x 8000 Hz = 34 400 samples long. Such a long input vector would make the DNN very complex, which would make it problematic to train.

Of course, we could only use part as the sentence as input, and as such, make the input vector arbitrarily short. In the extreme case, we could even use just one sample as input. However, one sample is just one number, and one number wouldn’t give our DNN any information on whether this sample represents noise, speech or both. Consequently, it will have no chance of actually improving the signal.

Instead, we need to present our noisy speech to the DNN in the shortest possible form that contains a maximum amount of relevant information to act on. Here it makes sense to borrow from what we know about our own hearing, because that will implicitly guide the DNN to improve those aspects that matter most to our own ears. This is especially true when we’re enhancing for human listeners, but this approach has also been shown to work when the DNN’s output is intended for machine interpretation.

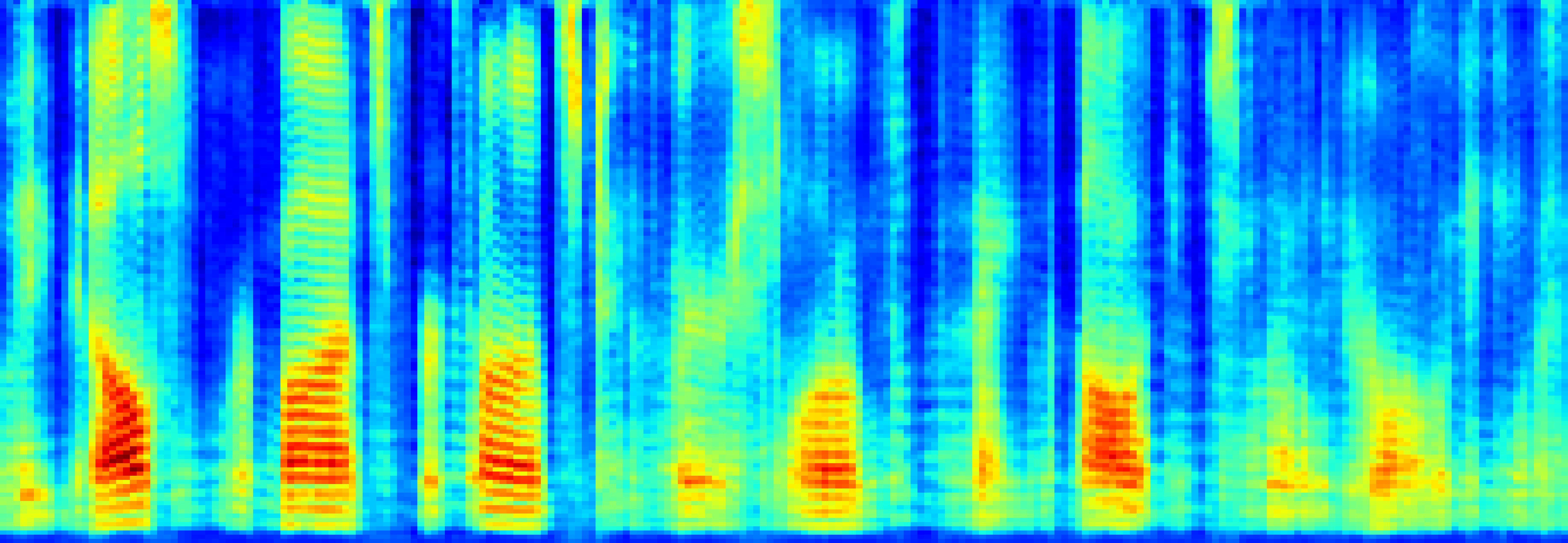

Sound that enters our ears eventually activates hair cells that are sensitive to specific frequencies. We can very roughly mimic this by switching to the frequency domain. We take a standard Fourier approach to this as shown below:

At this point, our signal is represented by a complex FFT array whose magnitude and phase values can be used as input to the DNN. However, so far, we seem to have only increased the size of the input to our DNN.

So an important next step is to ignore the phase vector. By ignoring, we mean that we will not feed it to the DNN. Instead, the DNN will be trained to optimise the magnitude vector only, and this resulting magnitude will be combined with noisy phase from the original signal. This introduces a limit to how good our system can become.

The following listening examples are created by combining an impeccably clean magnitude (the hypothetical result of a perfect DNN) with a noisy phase. We can hear that the damaging effect of a noisy phase depends on the total Signal to Noise Ratio (SNR). Once the SNR gets lower, the result becomes audibly worse. However, even at low SNRs, the enhanced results are clearly more intelligible than the noisy signal, which is an achievement worth aiming for.

| SNR [dB] | Noisy magnitude and noisy phase | Clean magnitude, but noisy phase |

|---|---|---|

| 15 | ||

| 5 | ||

| -5 |

The above samples provide evidence for the common speech enhancement notion that magnitude is more important than phase. By ignoring phase, we ensure that our DNN focusses on that which is perceptually most important. Another trick to make our DNN input more perceptually relevant is by taking the log power of our magnitude spectrum, to mimic the fact that our is exponentially sensitive to changes in volume (a doubling in sound energy does not sound twice as loud to our ears).

Now that we have our input vector ready, it is time to look at what we want to get out of our DNN. We will namely train the DNN in a so-called ‘supervised’ manner. For this, we need a dataset containing lots of noisy speech signals, and for each of these signals, we need a clean version to provide the DNN with a target. The combined dataset is called the ‘training set’. For the learning process to be effective, the training set should be large and cover as many different noise types, speakers, dialects and utterings as possible.

We want our DNN to produce one window of enhanced speech from an input that is several windows long, to include a bit of context that should help the DNN decide on what to do with the window it is supposed to clean. Having it output more than one window would mean that it most likely will process sequential windows fundamentally differently, meaning we’d get different results depending on where we start in an audio segment.

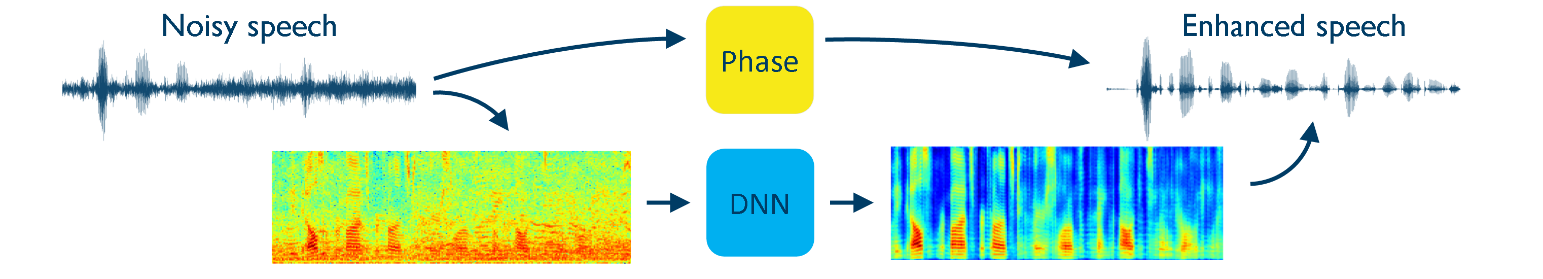

The figure below shows the complete pre-processing stage.

Training the DNN

We choose a rather standard type of DNN with a fancy name: the multilayer perceptron. We will simply treat it as a function that is complex enough so that it can be taught to represent the mapping between clean and noisy speech.

Training our DNN really means that we want it to minimize a function called the ‘loss function’. The loss function represent the difference between what you want to achieve and what the DNN is actually doing. Going into the detailed workings of how the loss function is minimised by repeatedly feeding the DNN with data is well beyond the scope of this blog. For simplicity’s sake, we will just accept for now that the loss function guides the training of the DNN – it tells the DNN what we expect from it.

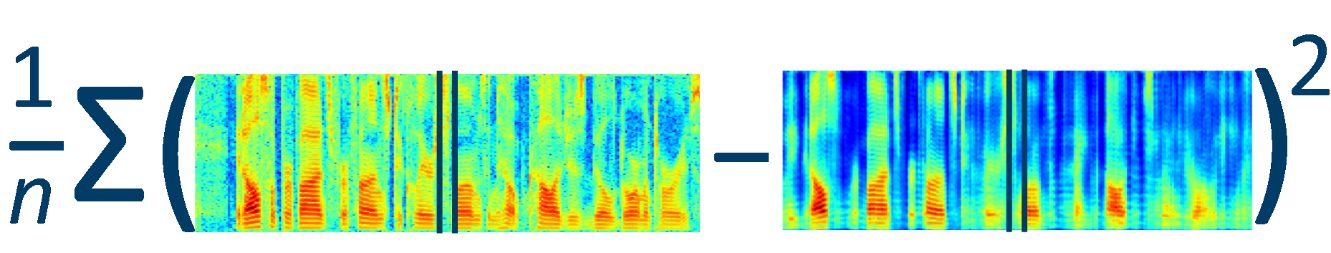

We define our loss function therefore as the difference between clean speech and DNN enhanced speech. More specifically, the loss function is the Mean Squared Error between the log power spectral features of clean and enhanced speech.

By repeatedly exposing the DNN to our training dataset, it will become better and better at minimalizing the loss function for this particular dataset. However, what we really are interested in is how good it works on unseen data (speakers, sentences and noises that it has not trained on, but could encounter in real-life). Therefore, we also need a validation set, which is very much like the training dataset, but contains different sentences from other speakers, which are combined with different background noises. At regular intervals, we check whether the loss function for the validation set is still decreasing. If it no longer does, we stop the training process.

Enhancement stage

Once we are done training, we can start using our DNN to enhance speech. As the DNN’s output is limited to the log-power spectrum of the enhanced signal, we need to reconstruct a waveform by reversing the pre-processing steps. Here we use the phase of the noisy input signal.

Listening examples

There are many tricks and parameters available to tweak the DNN and the training process and these provide a major challenge in achieving the desired results. The purpose of the following listening samples is to show what you can achieve with the relatively standard setup described in this blog. The output is by no means ideal – it’s hard to say whether the ‘enhanced signals’ are easier to understand at all. However, there is at least a clear difference – the DNN is doing something that results in the noise being removed to such a degree that it becomes hard to tell what type of noise it used to be…

| SNR [dB] | Noisy input | Enhanced output |

|---|---|---|

| 15 | ||

| 5 | ||

| -5 |

At SINTEF we’re working to improve upon this baseline, by researching how incorporating our knowledge of human hearing into the selection of features and training techniques affects the speech enhancing performance of DNNs.